AlphaGo Zero Lite

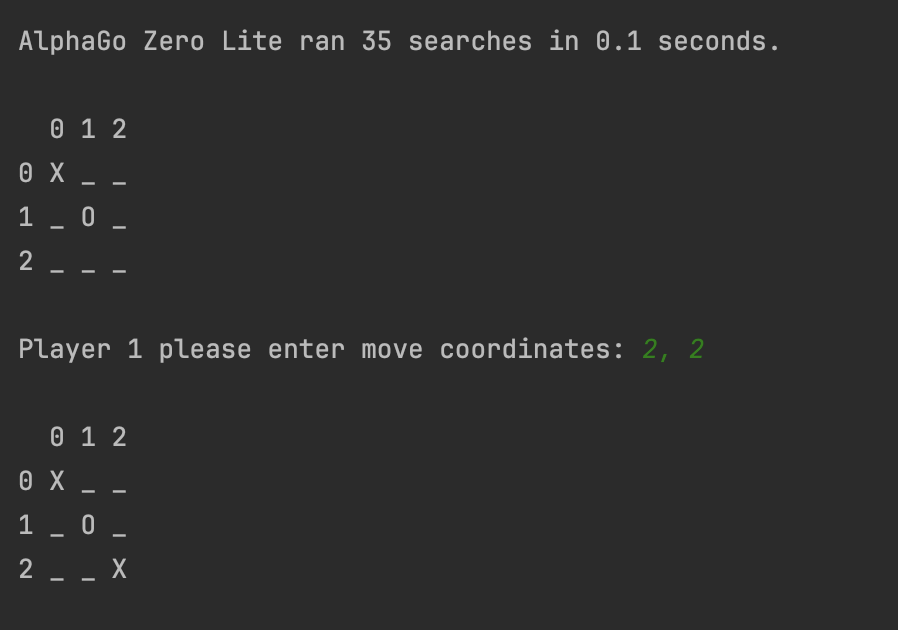

AlphaGo Zero was the second architectural evolution of AlphaGo, the first computer program to achieve superhuman performance in Go. AlphaGo Zero combines a neural network trained entirely through self-play with a Monte Carlo Tree Search (MCTS). The network's move probabilities and position evaluations guide the search, which narrows the search space to the most promising moves.

I am interested in studying how humans can learn from systems like this. To explore that question, I implemented a lightweight version of AlphaGo Zero that is small enough to run on a personal laptop. Currently, the tool has a command-line interface and supports playing tic-tac-toe, but it should be possible to train the model on any solvable game with minimal changes. The GitHub repo can be explored here.
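To make "search guided by the model" concrete, here is a minimal sketch of network-guided MCTS for tic-tac-toe. It is not the code from the repository. The board encoding (a 9-tuple of 0/+1/-1) and the names `mcts`, `simulate`, `select_child`, and `uniform_evaluator` are assumptions made for illustration. `uniform_evaluator` is a hypothetical stand-in for the trained network: it returns uniform move priors and a neutral value. Child selection uses the PUCT rule from the AlphaGo Zero paper, maximising Q + c_puct · P · √(Σ_b N_b) / (1 + N_a). Leaves are scored by the evaluator rather than by random rollouts.

```python
import math
from typing import Callable, Dict, List, Tuple

# Board: 9 cells, 0 = empty, +1 = X, -1 = O (an assumed encoding).
Board = Tuple[int, ...]
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: Board) -> int:
    """Return +1 or -1 if that player has three in a row, else 0."""
    for a, b, c in LINES:
        if board[a] != 0 and board[a] == board[b] == board[c]:
            return board[a]
    return 0


def legal_moves(board: Board) -> List[int]:
    """Empty cells, or no moves at all once someone has won."""
    if winner(board) != 0:
        return []
    return [i for i, cell in enumerate(board) if cell == 0]


def play(board: Board, move: int, player: int) -> Board:
    return board[:move] + (player,) + board[move + 1:]


# Hypothetical stand-in for the trained network: (move priors, value) for the
# side to move. A real model would predict both from the board.
Evaluator = Callable[[Board, int], Tuple[Dict[int, float], float]]


def uniform_evaluator(board: Board, player: int) -> Tuple[Dict[int, float], float]:
    moves = legal_moves(board)
    return {m: 1.0 / len(moves) for m in moves}, 0.0


class Node:
    def __init__(self, prior: float):
        self.prior = prior      # P(s, a) from the evaluator
        self.visits = 0         # N(s, a)
        self.value_sum = 0.0    # W(s, a), from the view of the player who moved here
        self.children: Dict[int, "Node"] = {}

    def q(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def select_child(node: Node, c_puct: float) -> Tuple[int, Node]:
    """PUCT: maximise Q + c_puct * P * sqrt(sum_b N_b) / (1 + N_a)."""
    sqrt_total = math.sqrt(sum(c.visits for c in node.children.values()))

    def score(item: Tuple[int, Node]) -> float:
        child = item[1]
        return child.q() + c_puct * child.prior * sqrt_total / (1 + child.visits)

    return max(node.children.items(), key=score)


def simulate(node: Node, board: Board, player: int,
             evaluate: Evaluator, c_puct: float) -> float:
    """One simulation; returns the value for `player`, the side to move."""
    w = winner(board)
    if w != 0:
        value = float(w * player)           # the previous mover won, so -1 here
    elif not legal_moves(board):
        value = 0.0                         # full board with no winner: draw
    elif not node.children:                 # leaf: expand using the evaluator, no rollout
        priors, value = evaluate(board, player)
        for move, p in priors.items():
            node.children[move] = Node(p)
    else:
        move, child = select_child(node, c_puct)
        value = -simulate(child, play(board, move, player), -player, evaluate, c_puct)
    node.visits += 1
    node.value_sum -= value                 # flip to the view of the player who moved here
    return value


def mcts(board: Board, player: int, evaluate: Evaluator = uniform_evaluator,
         n_simulations: int = 200, c_puct: float = 1.5) -> Dict[int, int]:
    """Return visit counts per root move; AlphaGo Zero plays in proportion to these."""
    root = Node(prior=1.0)
    for _ in range(n_simulations):
        simulate(root, board, player, evaluate, c_puct)
    return {move: child.visits for move, child in root.children.items()}


if __name__ == "__main__":
    # X (+1) to move and can win at cell 2; O also threatens cell 5.
    board = (1, 1, 0, -1, -1, 0, 0, 0, 0)
    visits = mcts(board, player=1)
    print(visits, "-> best move:", max(visits, key=visits.get))
```

Even with the uniform stand-in, the immediate win at cell 2 should collect most of the 200 visits, because its value of +1 is something no other move can match. Replacing `uniform_evaluator` with a trained network's predictions is what lets the search concentrate on strong moves from the start. That concentration is the pruning effect described above.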